Building applications with Large Language Models (LLMs) can quickly become a nightmare of complex code, API calls, and data management. If you’ve ever tried to create even a simple chatbot or AI assistant, you know the struggle. Enter LangChain – the framework that’s revolutionizing how developers build LLM applications.

What is LangChain?

LangChain is an open-source framework designed to simplify the development of applications powered by large language models. Think of it as a toolkit that handles all the heavy lifting when building AI applications, from managing conversations to integrating external tools.

Created by Harrison Chase in 2022, LangChain has quickly become the go-to solution for developers who want to build sophisticated LLM applications without drowning in complexity. Whether you’re building chatbots, question-answering systems, or AI agents, LangChain provides the building blocks you need.

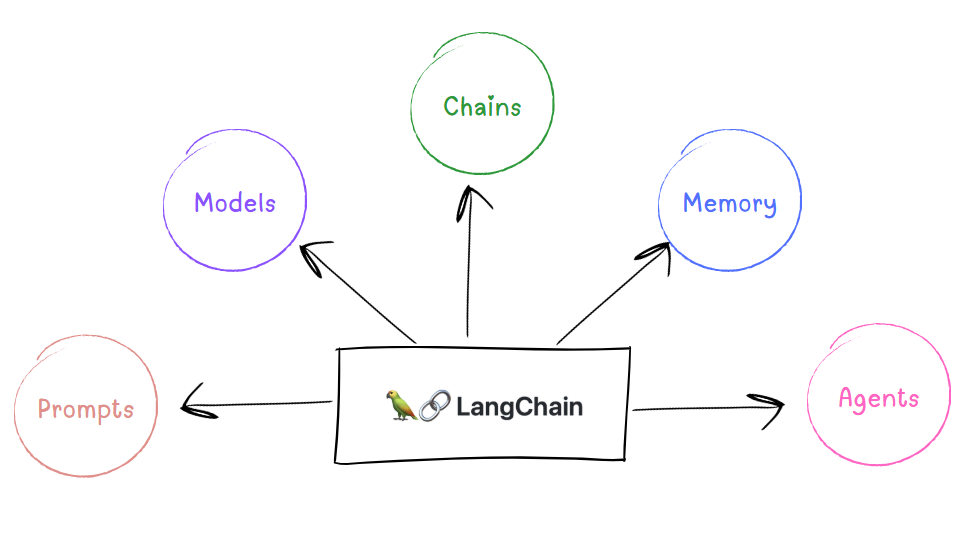

Key Components of LangChain

LangChain consists of several core components that work together seamlessly:

- Prompts: Templates and managers for LLM inputs

- Models: Interfaces for different LLM providers (OpenAI, Anthropic, etc.)

- Chains: Sequences of calls to models and other utilities

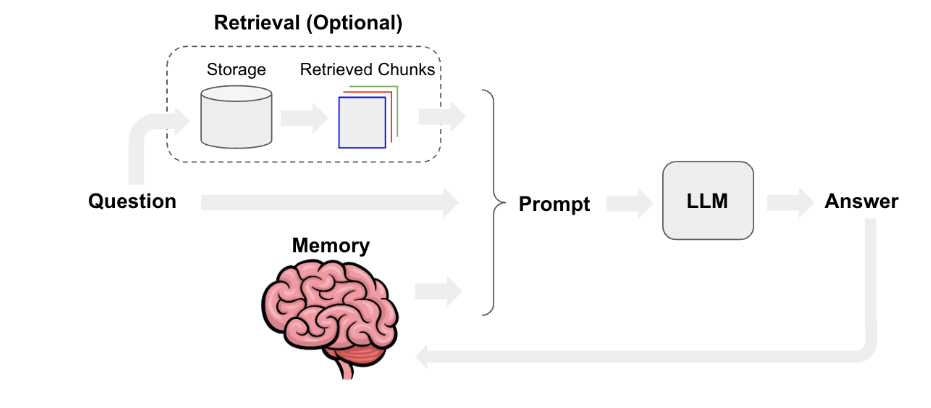

- Memory: Systems for persisting state between chain calls

- Agents: Decision-making entities that use tools to accomplish tasks
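To make the vocabulary concrete, here is a toy sketch in plain Python. It is not LangChain's implementation: `render_prompt`, `stub_model`, and `make_chain` are hypothetical stand-ins, and the "model" returns a canned string so the example runs without an API key.

```python
from typing import Callable

# Prompt: a template that turns user input into the text sent to a model.
def render_prompt(question: str) -> str:
    return f"You are a helpful assistant.\nQuestion: {question}"

# Model: anything that maps a prompt string to a reply string.
# This stand-in returns a canned reply instead of calling a real LLM.
def stub_model(prompt: str) -> str:
    last_line = prompt.splitlines()[-1]
    return f"(stub reply to: {last_line})"

# Chain: a sequence of steps where each output feeds the next input.
def make_chain(*steps: Callable[[str], str]) -> Callable[[str], str]:
    def run(value: str) -> str:
        for step in steps:
            value = step(value)
        return value
    return run

chain = make_chain(render_prompt, stub_model)
answer = chain("What is LangChain?")
print(answer)
```

In LangChain the same composition is written with the pipe operator, as in `prompt | llm`, and the steps are real prompt templates and model clients rather than plain functions.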

Why Do You Need LangChain as a Beginner?

1. Eliminates Boilerplate Code

Without LangChain, building an LLM application means writing hundreds of lines of repetitive code for API calls, error handling, and data formatting. LangChain reduces this to just a few lines of clean, readable code.

2. Handles Complex Workflows Simply

Real-world AI applications rarely involve single API calls. You need to:

- Chain multiple LLM calls together

- Manage conversation history

- Integrate external data sources

- Handle errors gracefully

LangChain makes these complex workflows feel like building with LEGO blocks.
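As a rough illustration of the "manage conversation history" item, the sketch below keeps a rolling window of messages in plain Python. `ConversationBuffer` and its `max_messages` parameter are hypothetical names for this example, not LangChain classes; LangChain's memory components take care of this kind of bookkeeping for you.

```python
from collections import deque
from typing import Deque, Dict, List

class ConversationBuffer:
    """Keeps only the most recent messages so prompts stay short."""

    def __init__(self, max_messages: int = 4) -> None:
        # A deque with maxlen drops the oldest entry automatically.
        self._messages: Deque[Dict[str, str]] = deque(maxlen=max_messages)

    def add(self, role: str, content: str) -> None:
        self._messages.append({"role": role, "content": content})

    def as_list(self) -> List[Dict[str, str]]:
        return list(self._messages)

history = ConversationBuffer(max_messages=4)
history.add("user", "Hi, my name is Sam.")
history.add("assistant", "Hello Sam!")
history.add("user", "What is LangChain?")
history.add("assistant", "A framework for LLM apps.")
history.add("user", "What is my name?")  # pushes out the oldest message

for message in history.as_list():
    print(f"{message['role']}: {message['content']}")
```

Notice that the window has dropped the message that contained the user's name. That is the trade-off of a fixed-size buffer, and one reason more elaborate memory strategies exist.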

3. Provider Agnostic

Want to switch from OpenAI to Claude or use multiple models? LangChain’s unified interface means you can swap providers with minimal code changes. This flexibility is crucial as the LLM landscape evolves rapidly.

4. Production-Ready Features

LangChain includes built-in features that beginners often overlook but that are essential for real applications:

- Memory management for conversational AI

- Error handling and retry logic

- Streaming responses for better user experience

- Cost tracking and optimization tools

Real-World Use Cases: Where LangChain Shines

Conversational AI Chatbots

Build chatbots that remember context and can access external information sources to provide accurate, helpful responses.

Document Question-Answering Systems

Create systems that can read your documents and answer questions about them – perfect for customer support or internal knowledge bases.

AI-Powered Data Analysis

Combine LLMs with your data to generate insights, create reports, and answer business questions in natural language.

Content Generation Pipelines

Automate content creation workflows that involve research, writing, editing, and formatting across multiple steps.

How LangChain Compares to DIY Approaches

Traditional Approach (Without LangChain)

1. Write API wrapper functions

2. Handle authentication and rate limiting

3. Create prompt templates manually

4. Implement conversation memory from scratch

5. Build error handling and retries

6. Manage multiple model providers separately

Result: 500+ lines of complex code
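To give a sense of what step 5 involves when written by hand, here is a minimal retry helper with exponential backoff. It uses only the standard library; `call_with_retries` and `flaky_request` are hypothetical names, and the flaky function stands in for a real LLM API request.

```python
import time
from typing import Callable, TypeVar

T = TypeVar("T")

def call_with_retries(
    func: Callable[[], T],
    max_attempts: int = 3,
    base_delay: float = 0.01,
) -> T:
    """Call func, retrying on any exception with exponential backoff."""
    for attempt in range(1, max_attempts + 1):
        try:
            return func()
        except Exception:
            if attempt == max_attempts:
                raise  # out of attempts: surface the last error
            # Wait base_delay, then 2x, 4x, ... before trying again.
            time.sleep(base_delay * 2 ** (attempt - 1))
    # Only reached when max_attempts is less than 1.
    raise RuntimeError("max_attempts must be at least 1")

# Hypothetical flaky call: it fails twice, then succeeds.
attempts = {"count": 0}

def flaky_request() -> str:
    attempts["count"] += 1
    if attempts["count"] < 3:
        raise ConnectionError("temporary failure")
    return "ok"

result = call_with_retries(flaky_request)
print(result, "after", attempts["count"], "attempts")
```

This covers only one item on the list above, and a production version would also need to distinguish retryable errors from permanent ones. LangChain runnables provide a `with_retry` method for the same purpose.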

LangChain Approach

1. Import LangChain components

2. Configure your chosen model

3. Define your chain or agent

4. Run your application

Result: 20-30 lines of clean code

Getting Started: Your Next Steps

Ready to dive into LangChain? Here’s your roadmap with a practical example:

1. Set Up Your Environment

- Install a recent version of Python (3.10 or newer is a safe choice; current LangChain releases no longer support 3.8)

- Install LangChain: <code>pip install langchain langchain-openai</code>

- Get API keys from your preferred LLM provider

2. Your First LangChain Application

Let’s build a simple AI assistant that can answer questions about any topic. Here’s a complete working example:

from langchain_openai import ChatOpenAI

from langchain.prompts import ChatPromptTemplate

from langchain.chains import LLMChain

import os

# Set up your OpenAI API key

os.environ["OPENAI_API_KEY"] = "Your-API-Key"

# Create the LLM

llm = ChatOpenAI(model="gpt-3.5-turbo", temperature=0.7)

# Create a prompt template

prompt = ChatPromptTemplate.from_messages([

("system", "You are a helpful AI assistant. Provide clear, concise answers."),

("user", "Question: {question}")

])

# Create a chain

chain = prompt | llm

# Use the chain

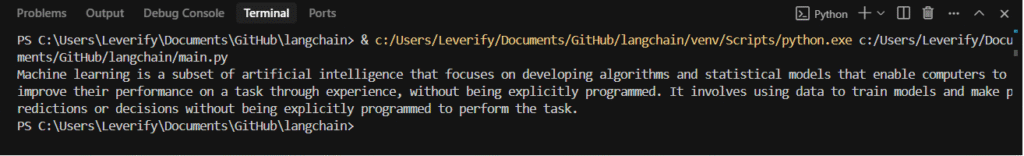

question = "What is machine learning?"

response = chain.invoke({"question": question})

print(response.content)

Output

What This Code Does:

- Creates an OpenAI chat model with LangChain

- Defines a reusable prompt template

- Combines them into a simple chain

- Processes a question and returns an AI response

Try It Yourself:

- Replace “Your-API-Key” with your actual OpenAI API key

- Run the code

- Change the question to see different responses

- Experiment with different temperature values (0.0 = focused, 1.0 = creative)

3. Explore the Documentation

LangChain’s documentation is extensive and beginner-friendly, with plenty of examples and tutorials.

4. Join the Community

The LangChain community is active and helpful – join Discord channels and GitHub discussions for support.

Common Beginner Mistakes to Avoid

Over-engineering from the start: Begin with simple chains and gradually add complexity as needed.

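Ignoring cost management: LLM API calls can get expensive quickly. Track token usage from the start, for example through the usage metadata LangChain attaches to model responses or through tracing with LangSmith.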

Not testing with different prompts: Small changes in prompts can dramatically affect results. Because LangChain prompts are templates, you can run the same inputs through several variants and compare the outputs.
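For the cost point above, a back-of-the-envelope estimate is often enough to start with. The sketch below is plain Python. The per-token prices and the workload figures are placeholder values chosen to make the arithmetic easy, not real rates for any provider, so substitute your provider's current pricing.

```python
# Placeholder prices in USD per 1,000 tokens (NOT real provider rates).
PRICE_PER_1K_INPUT = 0.001
PRICE_PER_1K_OUTPUT = 0.002

def estimate_cost(input_tokens: int, output_tokens: int) -> float:
    """Estimate the cost of one call from its token counts."""
    input_cost = input_tokens / 1000 * PRICE_PER_1K_INPUT
    output_cost = output_tokens / 1000 * PRICE_PER_1K_OUTPUT
    return input_cost + output_cost

# Hypothetical workload: 10,000 calls a day, 400 tokens in, 200 out.
per_call = estimate_cost(input_tokens=400, output_tokens=200)
per_day = per_call * 10_000
print(f"per call: ${per_call:.6f}")
print(f"per day:  ${per_day:.2f}")
```

Once real traffic exists, replace the assumed token counts with measured ones; the estimate is only as good as its inputs.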

Beyond Basic Chains: Introducing LangGraph

As you become comfortable with LangChain’s linear chains, you might encounter scenarios that need more complex decision-making and branching logic. This is where LangGraph comes in: a newer library from the LangChain team for building stateful, graph-based workflows.

What Makes LangGraph Different?

While traditional LangChain chains follow a linear path (A → B → C), LangGraph allows you to create workflows that branch, loop, and make decisions based on context. Think of it as the difference between following a straight highway and navigating a city with multiple routes and decision points.

LangGraph is perfect for:

- Multi-step reasoning that requires backtracking

- Agent workflows with conditional logic

- Complex decision trees in AI applications

- Iterative processes that need to loop until completion
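The branch-and-loop idea can be sketched without any library. The toy below is plain Python, not LangGraph's API: the node names, the `route` function, and the "add one sentence per pass" logic are all invented for illustration. In LangGraph itself you would declare nodes and conditional edges on a graph object and let the library run the loop.

```python
from typing import Any, Callable, Dict

State = Dict[str, Any]

def draft(state: State) -> State:
    # Pretend each pass improves the draft by adding one sentence.
    return {**state, "sentences": state["sentences"] + 1}

def review(state: State) -> State:
    # Approve once the draft reaches the target length.
    return {**state, "approved": state["sentences"] >= state["target"]}

def route(current: str, state: State) -> str:
    """Pick the next node from the current node and the state."""
    if current == "draft":
        return "review"
    # After review: loop back to draft unless the draft was approved.
    return "END" if state["approved"] else "draft"

NODES: Dict[str, Callable[[State], State]] = {"draft": draft, "review": review}

def run(state: State, start: str = "draft", max_steps: int = 20) -> State:
    node = start
    for _ in range(max_steps):  # guard against infinite loops
        state = NODES[node](state)
        node = route(node, state)
        if node == "END":
            return state
    raise RuntimeError("graph did not finish within max_steps")

final = run({"sentences": 0, "target": 3, "approved": False})
print(final)
```

A linear chain could not express this, because the number of draft and review passes is not known in advance; it depends on the state after each review.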

When to Consider LangGraph

Start with basic LangChain chains for most beginner projects. Consider exploring LangGraph when you need:

- Workflows that change direction based on results

- Multi-agent systems that collaborate

- Applications requiring complex state management

- Advanced reasoning patterns

Why LangChain is Essential for Modern AI Development

LangChain isn’t just another framework – it’s the bridge between complex LLM capabilities and practical, maintainable applications. For beginners, it removes the overwhelming technical barriers that often prevent great ideas from becoming reality.

Whether you’re building your first chatbot or planning a sophisticated AI agent, LangChain gives you the tools to focus on what matters: solving real problems with AI, not wrestling with infrastructure code.

And as your skills grow, LangGraph awaits to handle even more complex workflows and decision-making patterns. The future of AI development is here, and it’s more accessible than ever. With LangChain in your toolkit, you’re ready to build the AI applications you’ve been dreaming about.

Ready to bring AI innovation to your organization? Veritas Analytica specializes in custom AI development and implementation services. Book a meeting with us to discover how we can create tailored solutions for your specific business challenges.