AI, particularly Large Language Models (LLMs), has huge potential. However, the sad reality is that most businesses, especially startups and individuals, can’t afford the hefty price tags attached to accessing these models. In this blog, I will demonstrate how to create your own LLM API at a fraction of the cost.

Most LLMs are paid services. We access them with API tokens, and the usage fees add up quickly, especially for new startups and people who are just getting started in tech.

Some organizations and individuals use the Llama 3 API through Groq. It is a free option that suits small-scale applications and testing, but its token limit makes it a poor fit for large-scale applications.

There is another cost-effective way to use LLMs: pick one of the many open-source models and deploy it on hardware you control, then expose it as an API. This setup lets you use the model without token limits, which matters most for large-scale applications.

Although this setup has its own deployment costs, it is more cost-effective in the long run than paying a third party per token.

In this tutorial, I will guide you through creating an LLM API with Hugging Face and, most importantly, doing it free of cost.

Let’s get started!

What is Hugging Face?

Hugging Face is an American company that develops computational tools for building applications using machine learning. This company has an amazing platform known as Hugging Face Hub, where you can find 900k models, 200k datasets, and 300k demo apps (Spaces), all open-source and publicly available.

It is a place where anyone can learn, practice, and collaborate on building technology with machine learning.

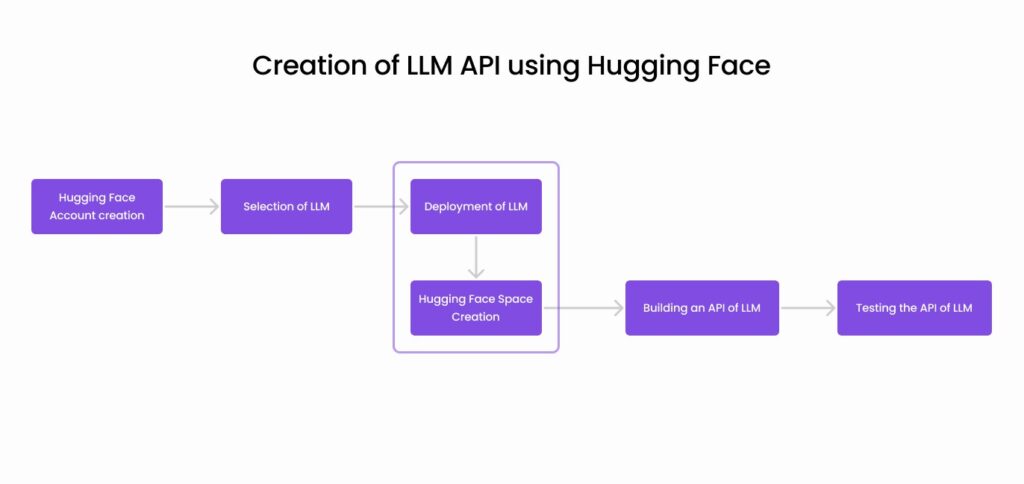

Creation of LLM API using Hugging Face

Here is a walkthrough of how to create an API for an LLM using Hugging Face.

Step 1: Hugging Face Account creation

To create an API for any LLM using Hugging Face, first create a Hugging Face account, which is free and pretty straightforward.

Once you have created the account, you will see an interface something like this:

Step 2: Selection of LLM

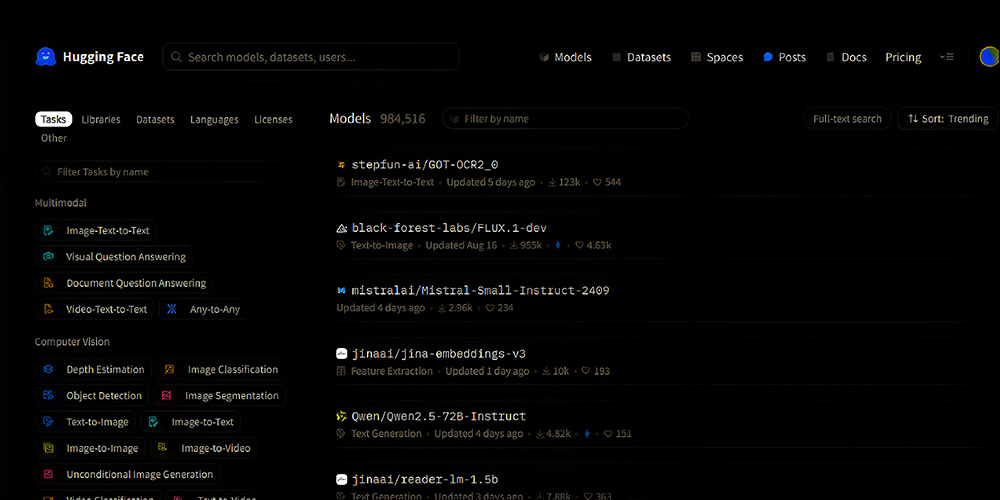

After creating your Hugging Face account, you will see a Models option in the navbar.

Click Models and you will see the complete list of available open-source models.
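If you prefer to browse the same list from code, the `huggingface_hub` package offers a model search. The snippet below is a minimal sketch, assuming the package is installed (`pip install huggingface_hub`) and that you have network access. The helper names `model_ids` and `search_models` are made up for this example.

```python
from typing import Any, Iterable, List


def model_ids(models: Iterable[Any]) -> List[str]:
    """Collect the repository ID (for example "org/model-name") of each result."""
    return [m.id for m in models]


def search_models(query: str, limit: int = 5) -> List[str]:
    """Search the Hugging Face Hub for models whose name matches `query`."""
    # Imported lazily so model_ids() can be used without the package installed.
    from huggingface_hub import HfApi

    return model_ids(HfApi().list_models(search=query, limit=limit))


# Usage (needs network access):
#   print(search_models("Meta-Llama-3"))
```

The results depend on what is published on the Hub at the time you run the search.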

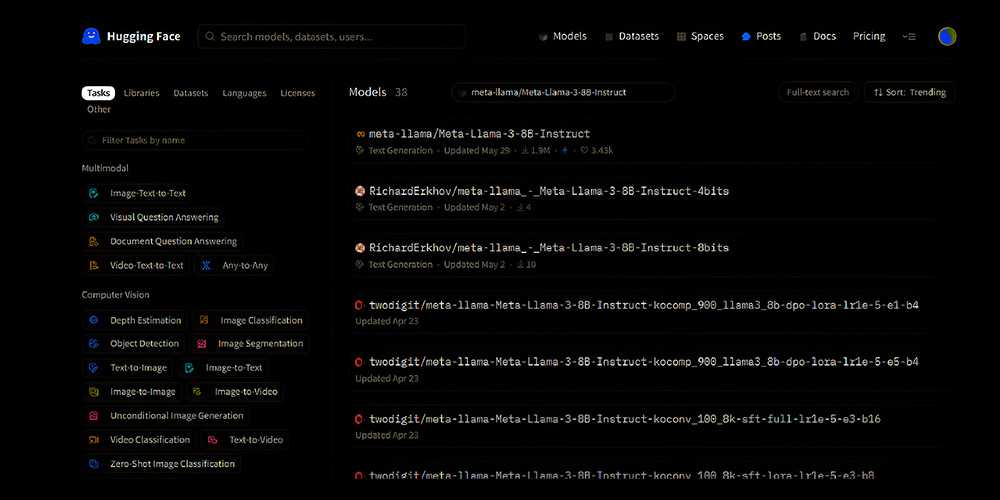

We are going to use a Llama 3 model for this tutorial.

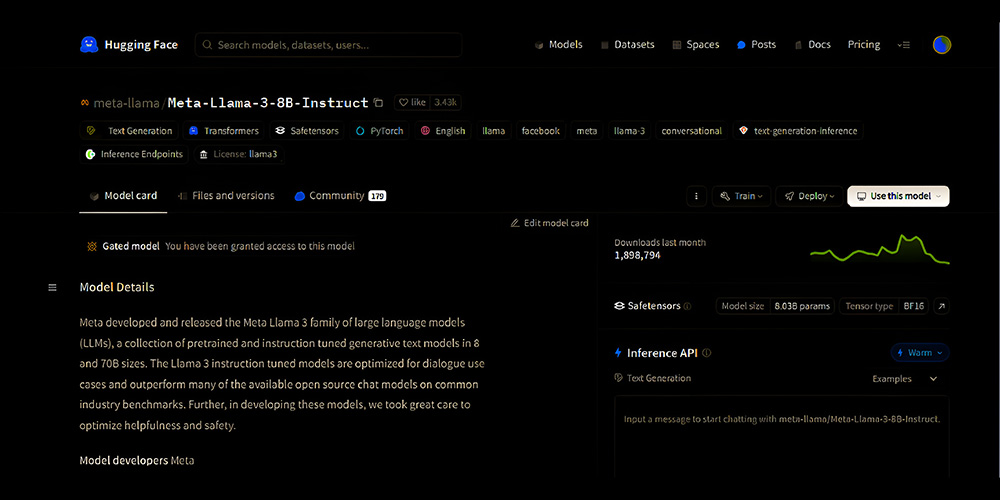

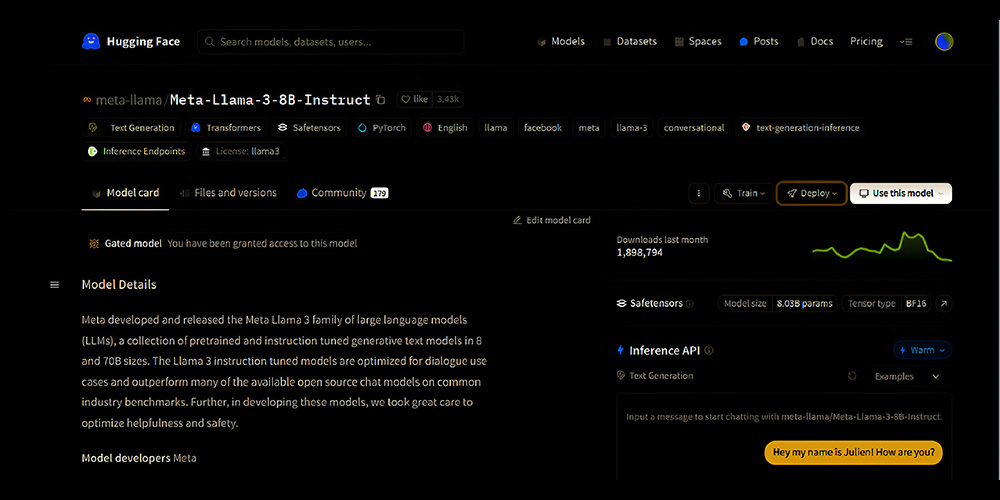

Click the model meta-llama/Meta-Llama-3-8B-Instruct. A detail page will open where you can find all the information about this model.

Step 3: Deployment of LLM

The next step is to deploy this LLM. Click the Deploy button.

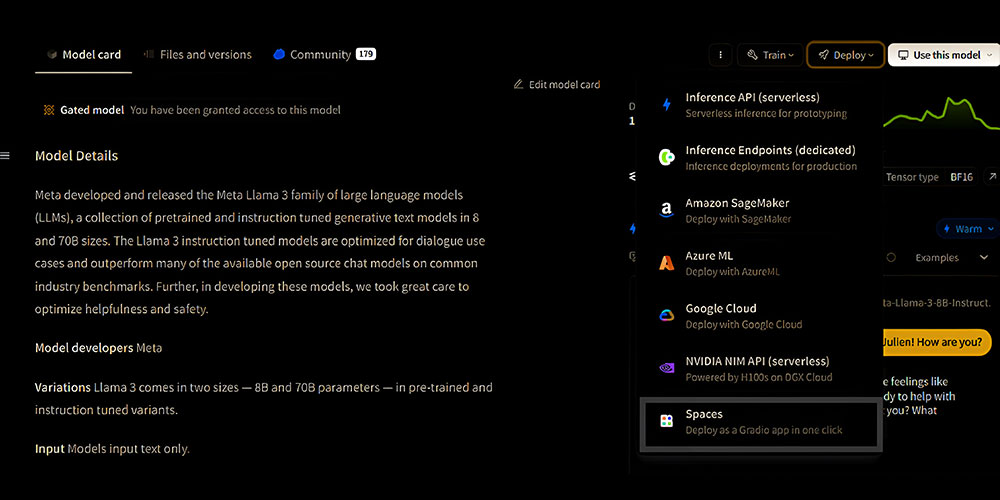

Hugging Face offers many deployment options for your LLM.

I am going to use the Spaces option to deploy the LLM in one click as a Gradio app. You can learn more about Hugging Face Spaces from the tutorial on their website.

Select Spaces from the dropdown. A pop-up will open:

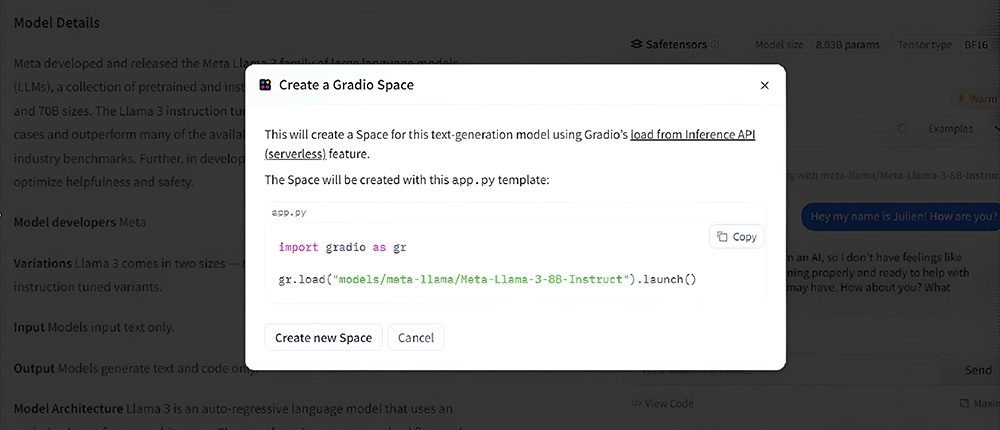

Now, you have to click on Create New Space.

Step 4: Hugging Face Space Creation

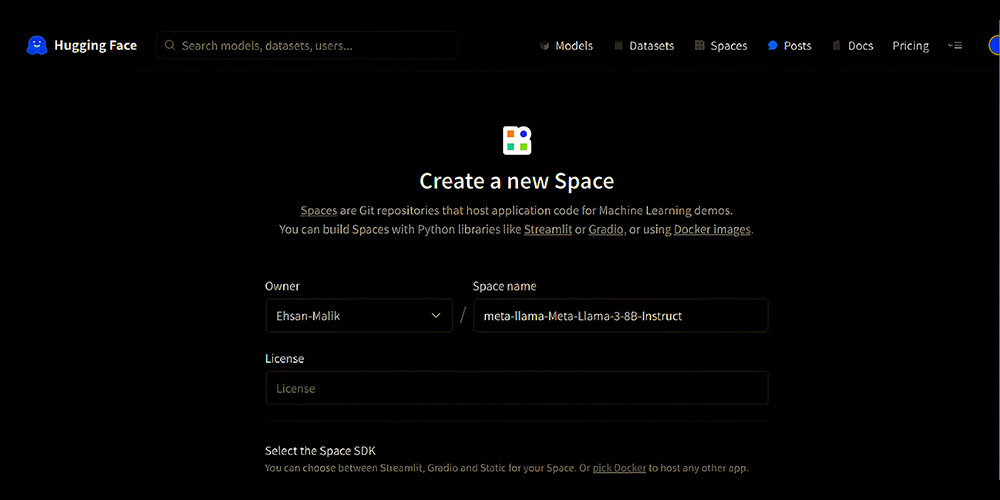

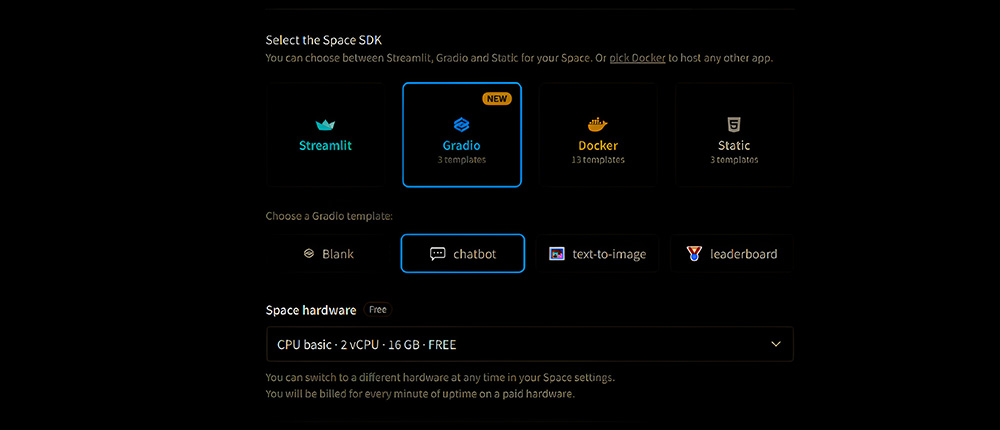

Once you have clicked Create New Space, a new page will open where you add the details of your Space.

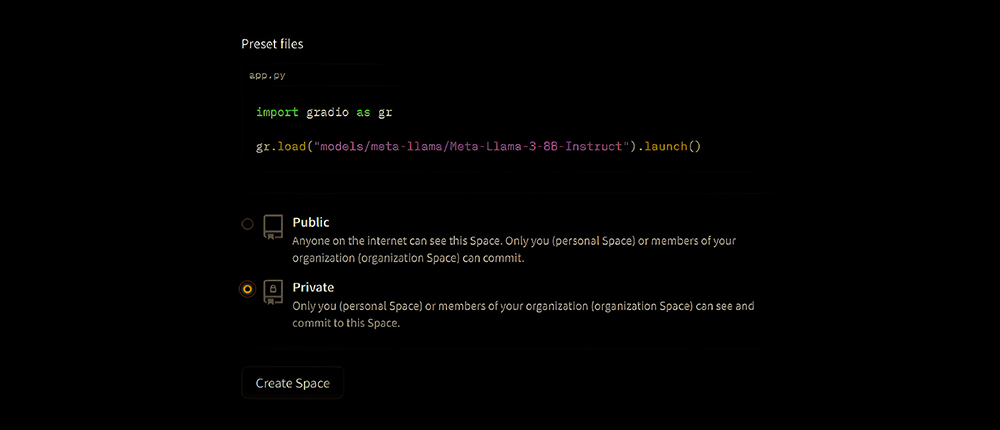

Keep the name as it is. Select a suitable license from the available options. Under Space SDK, select Gradio, and within Gradio select the chatbot option. Then select suitable hardware for your LLM. I am going to use the free hardware available.

You can select the visibility of your Space to be public or private as per your need. I am going to make it private.

And last but not least, click on the Create Space button at the end.

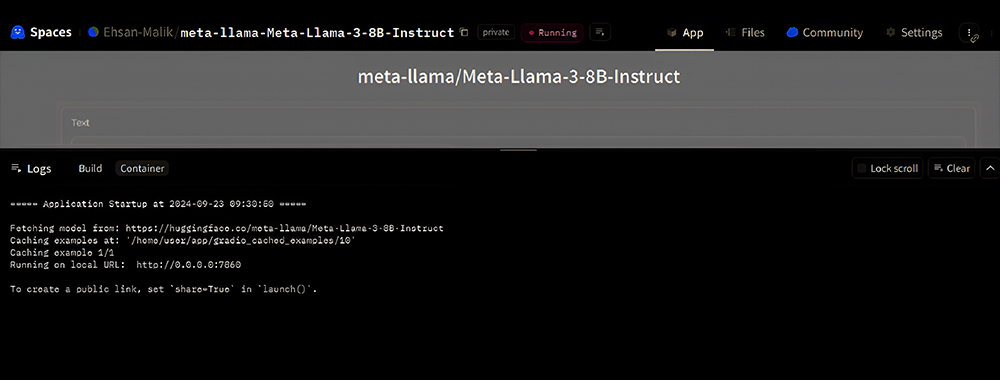

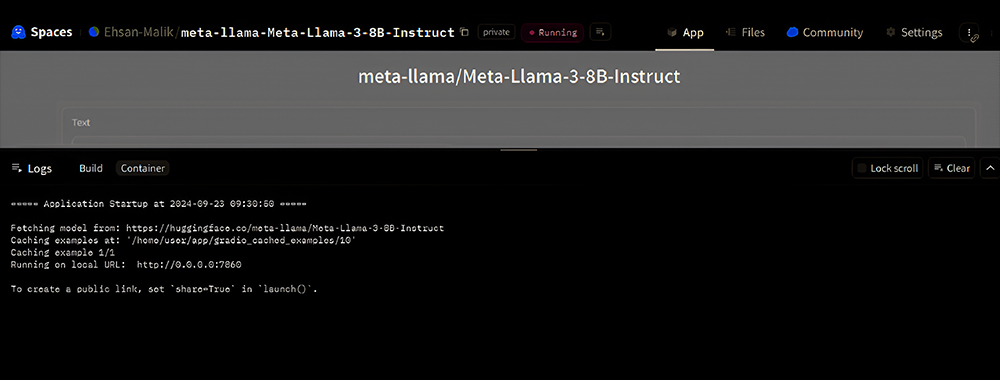

It will take a minute or two to build and deploy, and then your model will be up and running on the hardware you selected.
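For orientation, a Gradio Space like this one is driven by a small `app.py` file. The sketch below is an assumption about roughly what such a file might contain, not a copy of the generated one. It uses Gradio's `gr.load` with a `models/` prefix to wrap a model by its Hub ID. The helper names `hub_source` and `build_demo` are made up for this illustration.

```python
MODEL_ID = "meta-llama/Meta-Llama-3-8B-Instruct"


def hub_source(model_id: str) -> str:
    """Build the "models/<repo id>" string that gr.load accepts for Hub models."""
    return f"models/{model_id}"


def build_demo(model_id: str = MODEL_ID):
    """Create a Gradio interface for the given Hub model (assumed app.py logic)."""
    # Imported lazily; requires `pip install gradio` and network access.
    import gradio as gr

    return gr.load(hub_source(model_id))


# Usage inside a Space's app.py (assumption):
#   build_demo().launch()
```

You can open the Files tab of your Space to see the code that was actually generated for you.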

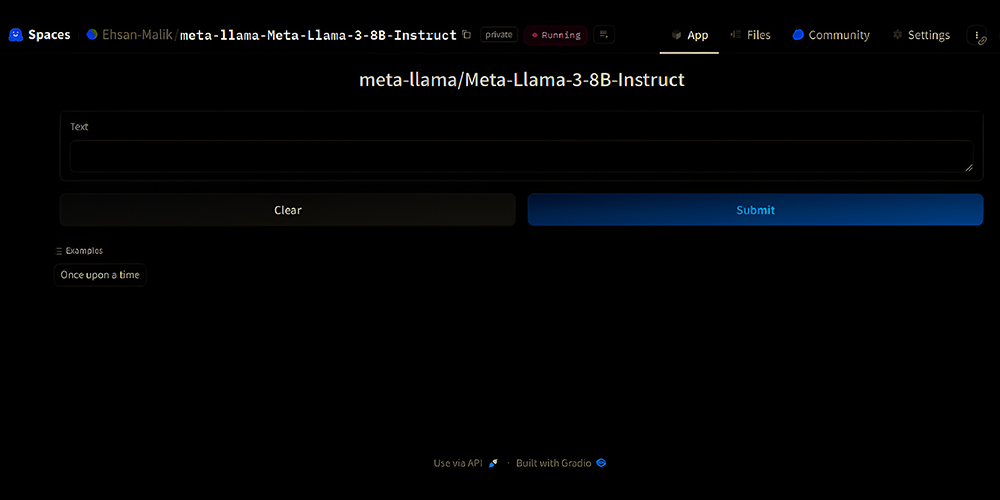

Now click the X icon on the right to close the logs window. You will see this interface.

Step 5: Building an API of LLM

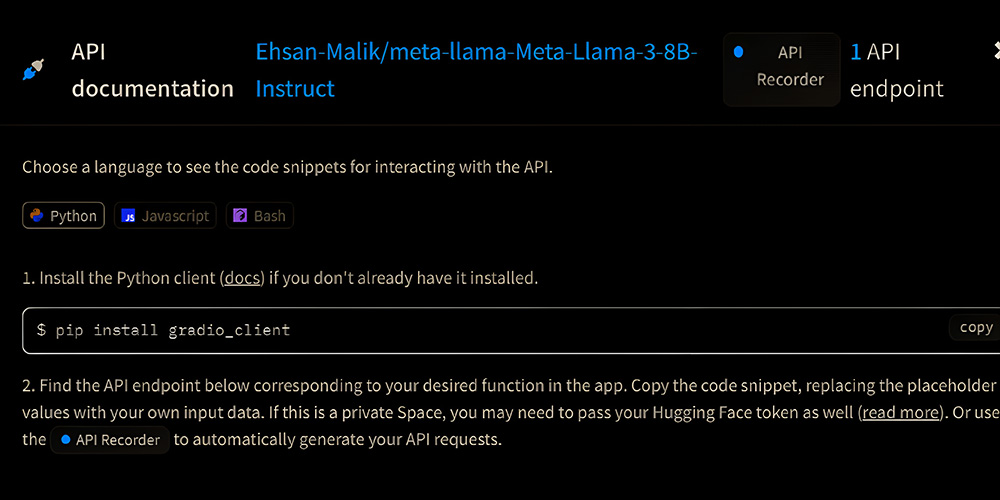

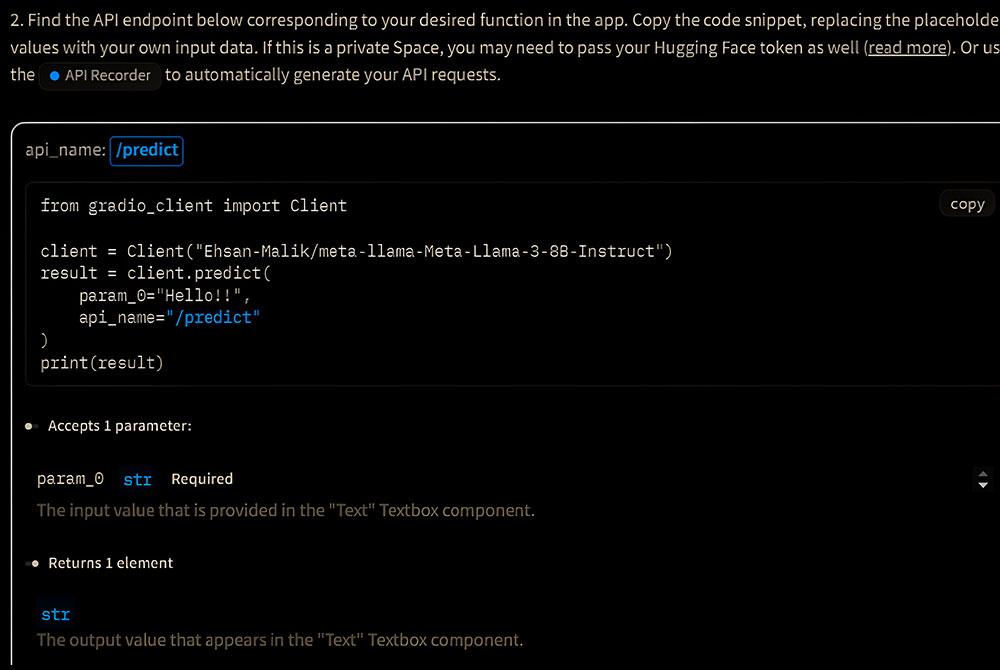

Now click Use via API at the bottom of the page, and your API is ready to use.

Here you will find instructions for using your API, along with sample code to test it.

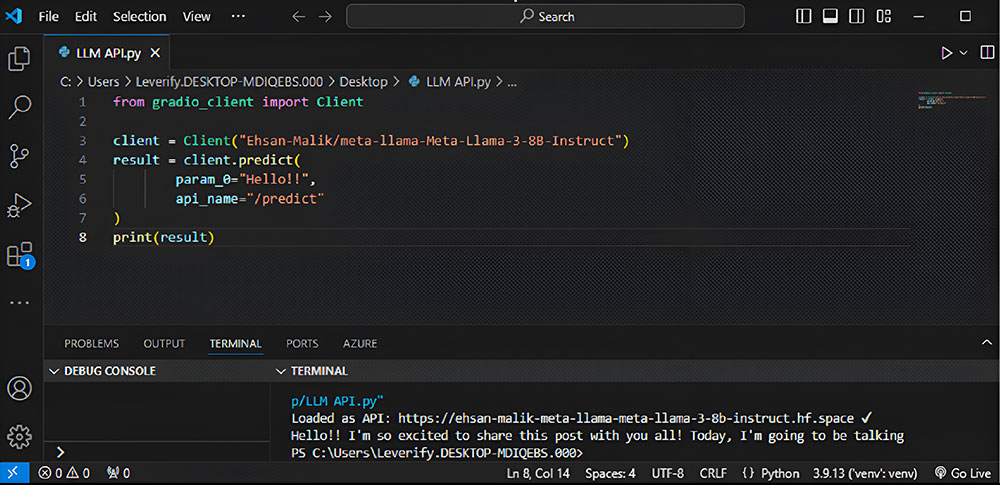

Step 6: Testing the API of LLM

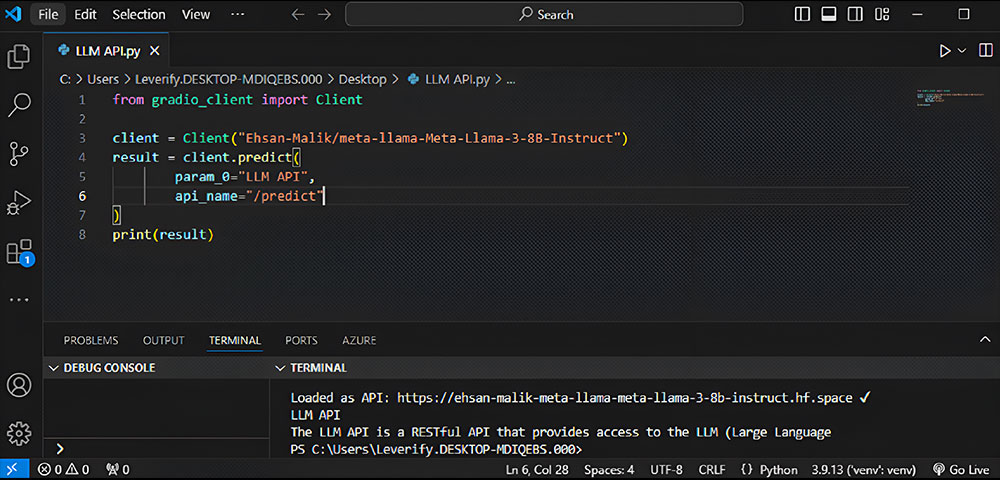

This model performs text completion: it generates or completes text based on the user's input. You pass your prompt to the model through the param_0 variable, and the model returns its output in the result variable.

Note: If you set the Space to private during creation, change its visibility to public in the settings before using the API.
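A call like the one described above can be sketched with the `gradio_client` package (`pip install gradio_client`). Treat the details as assumptions: the Space ID in the usage comment is a placeholder for your own, and `/predict` is only a common default endpoint name, so check the Use via API panel of your Space for the exact values. The helper names `connect` and `complete` are made up for this example.

```python
from typing import Any


def connect(space_id: str) -> Any:
    """Open a client for a public Space such as "your-username/your-space"."""
    # Imported lazily so complete() can be tried out with any client-like object.
    from gradio_client import Client

    return Client(space_id)


def complete(client: Any, prompt: str, api_name: str = "/predict") -> Any:
    """Send one prompt to the Space endpoint and return the model's result."""
    if not prompt.strip():
        raise ValueError("prompt must not be empty")
    # The prompt is passed positionally, so it fills the first input (param_0).
    return client.predict(prompt, api_name=api_name)


# Usage (needs network access and a running public Space):
#   client = connect("your-username/Meta-Llama-3-8B-Instruct")  # placeholder ID
#   result = complete(client, "Hello")
#   print(result)
```

Keeping the connection and the request in separate functions lets you reuse one client for many prompts instead of reconnecting each time.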

Sample response of LLM API with input “Hello”

Sample response of LLM API with input “LLM API”

Conclusion:

Creating an API with Hugging Face lets you easily integrate Large Language Models (LLMs) into your projects. With a user-friendly interface, a vast library of pre-trained models, and simple deployment options, it’s the ideal platform for businesses and developers working with LLM applications.

If your business is ready to accelerate its growth with LLMs, Veritas Analytica has a dedicated team of Machine Learning experts who can supercharge your LLM advantage. You can book a free consultation with the team.