Large Language Models (LLMs) are trained on massive datasets and have had a profound impact on the way we understand and generate content. Models like GPT-4 can generate novel content, answer user queries, and translate between languages.

LLMs have many other advantages, but they also have a major drawback: they respond based only on the data they were trained on.

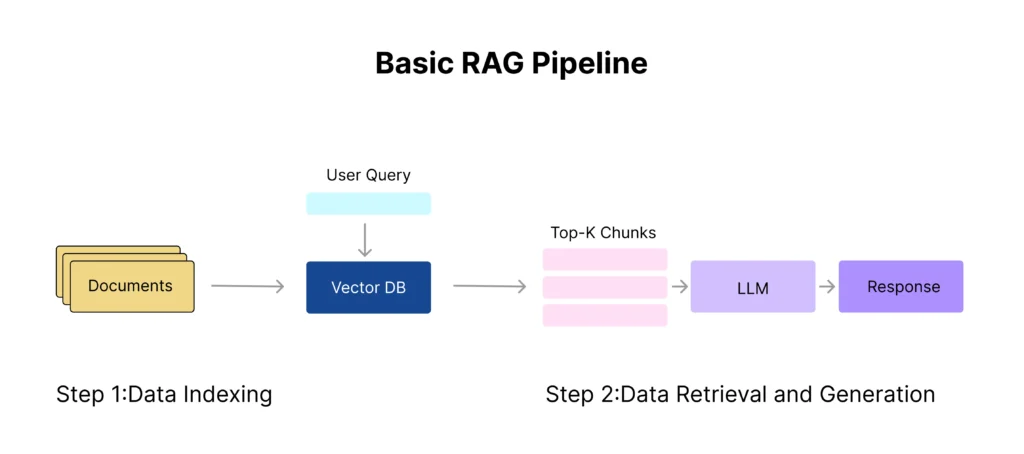

To overcome this limitation, we use Retrieval-Augmented Generation (RAG), a technique that incorporates an external knowledge source into the prompt sent to the LLM, improving the relevance and factual accuracy of its responses.

In this article, we will implement RAG using GPT-4, an advanced language model from OpenAI, together with ObjectBox, an open-source, lightweight vector database. This implementation lets us store and retrieve external knowledge and incorporate it into the GPT-4 response, making that response more factually accurate and up to date.

So, whether you’re an AI enthusiast, a developer, or just someone curious about smarter AI, this step-by-step tutorial is for you!

But before we start coding, let’s break down some key concepts to set the stage for what we’re about to build.

What is Retrieval-Augmented Generation (RAG)?

Retrieval-augmented generation (RAG) is an LLM optimization technique that lets the model answer from a custom knowledge base outside its training data. At query time, the passages most relevant to the question are retrieved and added to the prompt, so the LLM can generate domain-specific, factually accurate, and context-aware responses without being fine-tuned.
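
To make the retrieve-then-generate idea concrete, here is a minimal, library-free sketch. It is an illustration only: real systems rank passages by embedding similarity rather than by shared words, and the function names (`tokenize`, `retrieve`, `build_augmented_prompt`) and the sample knowledge base are hypothetical.

```python
import re

def tokenize(text):
    """Lowercase the text and return its set of alphanumeric words."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))

def retrieve(question, knowledge_base, top_k=2):
    """Rank passages by how many words they share with the question."""
    question_words = tokenize(question)
    ranked = sorted(
        knowledge_base,
        key=lambda passage: len(question_words & tokenize(passage)),
        reverse=True,
    )
    return ranked[:top_k]

def build_augmented_prompt(question, passages):
    """Place the retrieved passages ahead of the question."""
    context = "\n".join(f"- {p}" for p in passages)
    return f"Context:\n{context}\n\nQuestion: {question}\nAnswer:"

# Hypothetical knowledge base used only for this illustration
knowledge_base = [
    "ObjectBox is a local-first database with vector search.",
    "RAG retrieves external passages and adds them to the prompt.",
    "Bananas are rich in potassium.",
]

question = "How does RAG use external passages?"
passages = retrieve(question, knowledge_base, top_k=1)
prompt_text = build_augmented_prompt(question, passages)
print(prompt_text)
```

The resulting `prompt_text` is what would be sent to the LLM in place of the bare question.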

Benefits of RAG

RAG offers organizations several ways to enhance the capabilities of their LLMs.

- Cost Effectiveness

Fine-tuning an LLM requires substantial computational resources, technical expertise, and time. RAG leaves the model weights unchanged and only adds a retrieval step, which makes it a more cost-effective way to bring new knowledge to an LLM.

- Improves Credibility

Another major benefit is that RAG improves the credibility of LLM responses. Because each answer is grounded in retrieved passages, the system can cite the knowledge source alongside the response. Users who want to verify an answer can check the cited source.

- Accurate Information

Without RAG, an LLM answers from its training data only, which may be out of date. With RAG, the LLM can draw on the updated data sources supplied at query time and give more accurate responses.
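
The credibility benefit depends on keeping track of where each retrieved passage came from. The sketch below shows one way this could be done, assuming each retrieved chunk carries `text`, `source`, and `page` fields; the function name, the field names, and the sample chunks are hypothetical placeholders.

```python
def format_context_with_sources(chunks):
    """Number each chunk and collect a matching list of source references."""
    context_lines = []
    references = []
    for number, chunk in enumerate(chunks, start=1):
        context_lines.append(f"[{number}] {chunk['text']}")
        references.append(f"[{number}] {chunk['source']}, page {chunk['page']}")
    return "\n".join(context_lines), references

# Placeholder chunks; in a real pipeline these come from the retriever
retrieved_chunks = [
    {"text": "Example passage about topic A.", "source": "report_a.pdf", "page": 3},
    {"text": "Example passage about topic B.", "source": "report_b.pdf", "page": 12},
]

context, references = format_context_with_sources(retrieved_chunks)
print(context)
print("Sources:")
for reference in references:
    print(reference)
```

The numbered context goes into the prompt, and the reference list can be shown next to the answer so that readers can check each claim against its source.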

Why Use ObjectBox?

ObjectBox is an open-source, fast, local-first database designed for low-latency data retrieval. Unlike cloud databases, which add a network round trip to every query, ObjectBox runs on the same machine as the application, making it well suited to real-time AI applications.
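
To show what a vector database does when it retrieves data, the following sketch performs a brute-force nearest-neighbor search with cosine similarity. It does not use the ObjectBox API, and production vector databases typically rely on an approximate index instead of comparing against every stored vector; the function names and the three-dimensional sample vectors are hypothetical.

```python
import math

def cosine_similarity(a, b):
    """Cosine of the angle between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)

def nearest_neighbors(query_vector, stored_vectors, top_k=2):
    """Return the ids of the top_k stored vectors most similar to the query."""
    scored = [
        (cosine_similarity(query_vector, vector), doc_id)
        for doc_id, vector in stored_vectors.items()
    ]
    scored.sort(reverse=True)
    return [doc_id for _, doc_id in scored[:top_k]]

# Toy embeddings; real embeddings have hundreds or thousands of dimensions
stored_vectors = {
    "doc_a": [1.0, 0.0, 0.0],
    "doc_b": [0.0, 1.0, 0.0],
    "doc_c": [0.9, 0.1, 0.0],
}

print(nearest_neighbors([1.0, 0.0, 0.0], stored_vectors))
```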

Step-by-Step Implementation of RAG with GPT-4 and ObjectBox

Prerequisites

Before we begin, make sure you have:

- An OpenAI API key to access GPT-4.

- Python 3.8+ installed.

- ObjectBox Python SDK (pip install objectbox).

- A dataset or document collection for retrieval.

- A code editor like VS Code, Jupyter Notebook, or Google Colab.
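
A quick way to confirm the first two prerequisites before running the tutorial is a small check like the one below. This is an optional sketch; the helper name `check_prerequisites` is hypothetical, and it only verifies the Python version and the presence of the `OPENAI_API_KEY` environment variable.

```python
import os
import sys

def check_prerequisites(env=None, version=None):
    """Return a list of problems; an empty list means the checks passed."""
    env = os.environ if env is None else env
    version = sys.version_info[:2] if version is None else version
    problems = []
    if version < (3, 8):
        problems.append(f"Python 3.8+ is required, found {version[0]}.{version[1]}")
    if not env.get("OPENAI_API_KEY"):
        problems.append("OPENAI_API_KEY is not set")
    return problems

for problem in check_prerequisites():
    print("Missing prerequisite:", problem)
```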

1. Install Required Libraries

First, install the Python libraries required for this implementation. The list includes python-dotenv, langchain, langchain-community, and scikit-learn, which the imports in the next step rely on.

pip install openai objectbox sentence-transformers pandas langchain langchain-community langchain_objectbox langchain_openai langchain_text_splitters pypdf python-dotenv scikit-learn

2. Import Dependencies

import os

import pandas as pd
from dotenv import load_dotenv
from langchain.chains import RetrievalQA
from langchain_community.document_loaders import PyPDFLoader
from langchain_core.prompts import ChatPromptTemplate, PromptTemplate
from langchain_objectbox.vectorstores import ObjectBox
from langchain_openai import ChatOpenAI, OpenAIEmbeddings
from langchain_text_splitters import RecursiveCharacterTextSplitter
from sentence_transformers import SentenceTransformer
from sklearn.metrics.pairwise import cosine_similarity

# Load OPENAI_API_KEY from a local .env file into the environment
load_dotenv()
if not os.getenv("OPENAI_API_KEY"):
    raise RuntimeError("OPENAI_API_KEY is not set; add it to your .env file")

3. Load PDFs and Prepare Data

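pdf_directory = "Datas"  # Replace with your folder containing PDFs

def load_pdfs_from_directory(directory_path):
    pdf_documents = []
    for filename in os.listdir(directory_path):
        if filename.endswith(".pdf"):
            pdf_documents.extend(PyPDFLoader(os.path.join(directory_path, filename)).load())
    return pdf_documents

# Assumed values: the loader call above and the chunk sizes below are illustrative
splitter = RecursiveCharacterTextSplitter(chunk_size=1000, chunk_overlap=200)
documents = splitter.split_documents(load_pdfs_from_directory(pdf_directory))

4. Set Up ObjectBox Vector Store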
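
Before the documents are embedded, long PDF pages are usually split into smaller, overlapping chunks, which is the job of the `RecursiveCharacterTextSplitter` imported in step 2. The following is a simplified, character-based sketch of that idea, not the splitter's actual algorithm (the real splitter also tries to break on separators such as paragraph and line breaks). The function `split_with_overlap` and its parameter values are hypothetical.

```python
def split_with_overlap(text, chunk_size=1000, chunk_overlap=200):
    """Cut text into fixed-size windows that share chunk_overlap characters."""
    if chunk_overlap >= chunk_size:
        raise ValueError("chunk_overlap must be smaller than chunk_size")
    step = chunk_size - chunk_overlap
    chunks = []
    for start in range(0, len(text), step):
        chunks.append(text[start:start + chunk_size])
        # Stop once the current window has reached the end of the text
        if start + chunk_size >= len(text):
            break
    return chunks

sample = "abcdefghijklmnopqrstuvwxyz"
print(split_with_overlap(sample, chunk_size=10, chunk_overlap=3))
# prints ['abcdefghij', 'hijklmnopq', 'opqrstuvwx', 'vwxyz']
```

The overlap means that a sentence falling on a chunk boundary still appears whole in at least one chunk, at the cost of storing some text twice.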

# text-embedding-ada-002, the OpenAIEmbeddings default, returns 1536-dimensional vectors
vector = ObjectBox.from_documents(documents, OpenAIEmbeddings(), embedding_dimensions=1536)

5. Define LLM and Prompt

llm = ChatOpenAI(model="gpt-4")

prompt = PromptTemplate(
    input_variables=["question", "context"],
    template="""You are an AI assistant with expertise in research and technical analysis.
Based on the retrieved information, provide a clear and well-structured response.

Question: {question}

Context: {context}

Answer:""",
)
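
To see what the model actually receives, the sketch below fills the same template with plain Python string formatting. It assumes the retrieved chunks are joined with blank lines before being placed in `{context}`, which is how a "stuff"-style chain is generally understood to work; `TEMPLATE`, `fill_prompt`, and the sample chunks are hypothetical names used for illustration.

```python
TEMPLATE = """You are an AI assistant with expertise in research and technical analysis.
Based on the retrieved information, provide a clear and well-structured response.

Question: {question}

Context: {context}

Answer:"""

def fill_prompt(question, retrieved_chunks):
    """Join the retrieved chunks and substitute them into the template."""
    context = "\n\n".join(retrieved_chunks)
    return TEMPLATE.format(question=question, context=context)

filled = fill_prompt("What is RAG?", ["Chunk one.", "Chunk two."])
print(filled)
```

Printing the filled prompt like this is a simple way to debug poor answers: if the relevant passage is missing from the context, the problem lies in retrieval rather than in the LLM.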

6. Implement RAG-based Retrieval

qa_chain = RetrievalQA.from_chain_type(
    llm,
    retriever=vector.as_retriever(),
    chain_type_kwargs={"prompt": prompt},
)

7. Ask a Question

question = "What are the genetic and environmental factors influencing food allergies?"

result = qa_chain.invoke({"query": question})

print(result["result"])

Real-World Applications of RAG with GPT-4 and ObjectBox

Now that we have seen how to implement RAG with GPT-4 and ObjectBox for this particular use case, let’s discuss some other use cases for this approach.

Enterprise AI Assistants:

Businesses can use RAG to manage and retrieve internal knowledge. This lets them take advantage of LLMs while keeping the knowledge base in a database they control.

Customer Support AI:

With RAG, we can supply our domain knowledge to the LLM as an external knowledge base, so that it answers user queries based on that knowledge.

Academic Research Tools:

RAG can be extremely beneficial in academic research, for example to automate literature reviews with accurate references.

Final Thoughts

By combining GPT-4 with RAG and ObjectBox, we can develop smarter, faster, and more reliable AI systems. Unlike models that generate responses solely from their training data, RAG enables AI to retrieve and incorporate relevant knowledge from external sources, making it well suited to real-world applications.